Pan-tilt face tracking gimbal with audio functionality

- Category: Class project

- Project date: Spring 2023

- Skills used: Laser cutting, Python, OpenCV, PCB design in Altium

- Github repository

About this project

For my final project in 2.679, Electronics for Mechanical Systems II, I wanted to apply what I had learned about computer vision to a pan-tilt gimbal that tracks faces. Eventually, we hope to integrate this system into a full-scale WALL-E robot during our senior year, so that WALL-E can run around and follow a person.

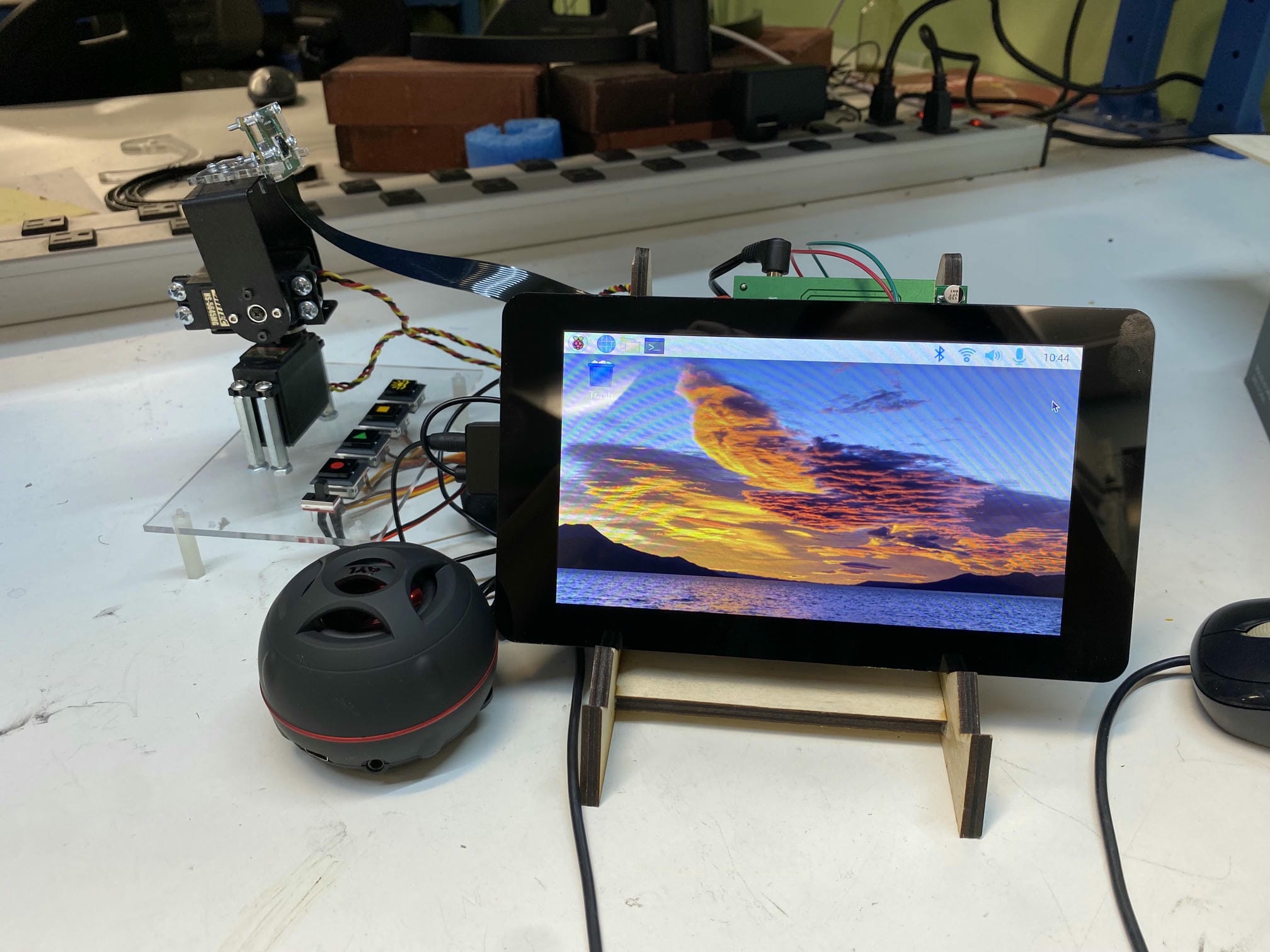

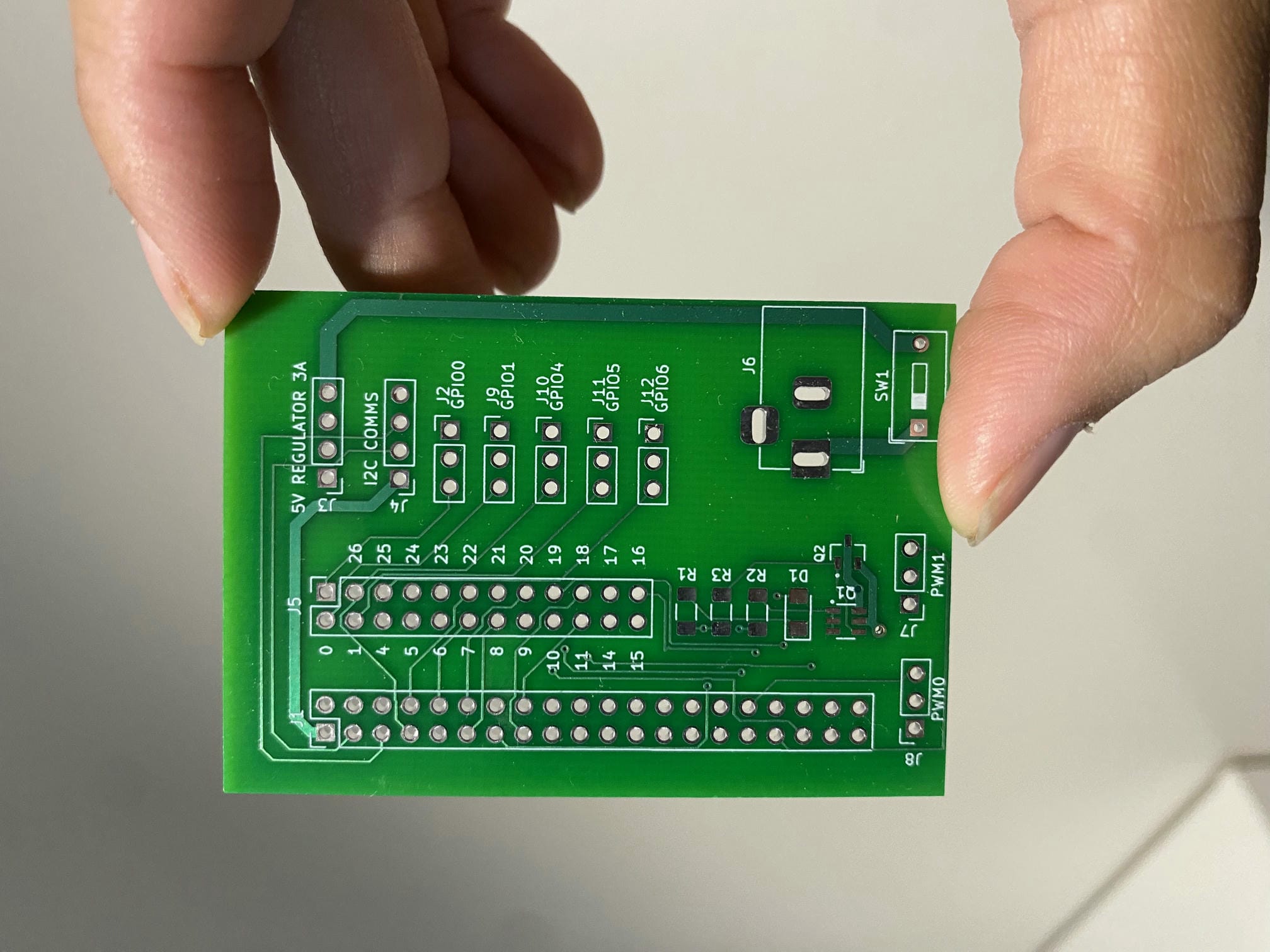

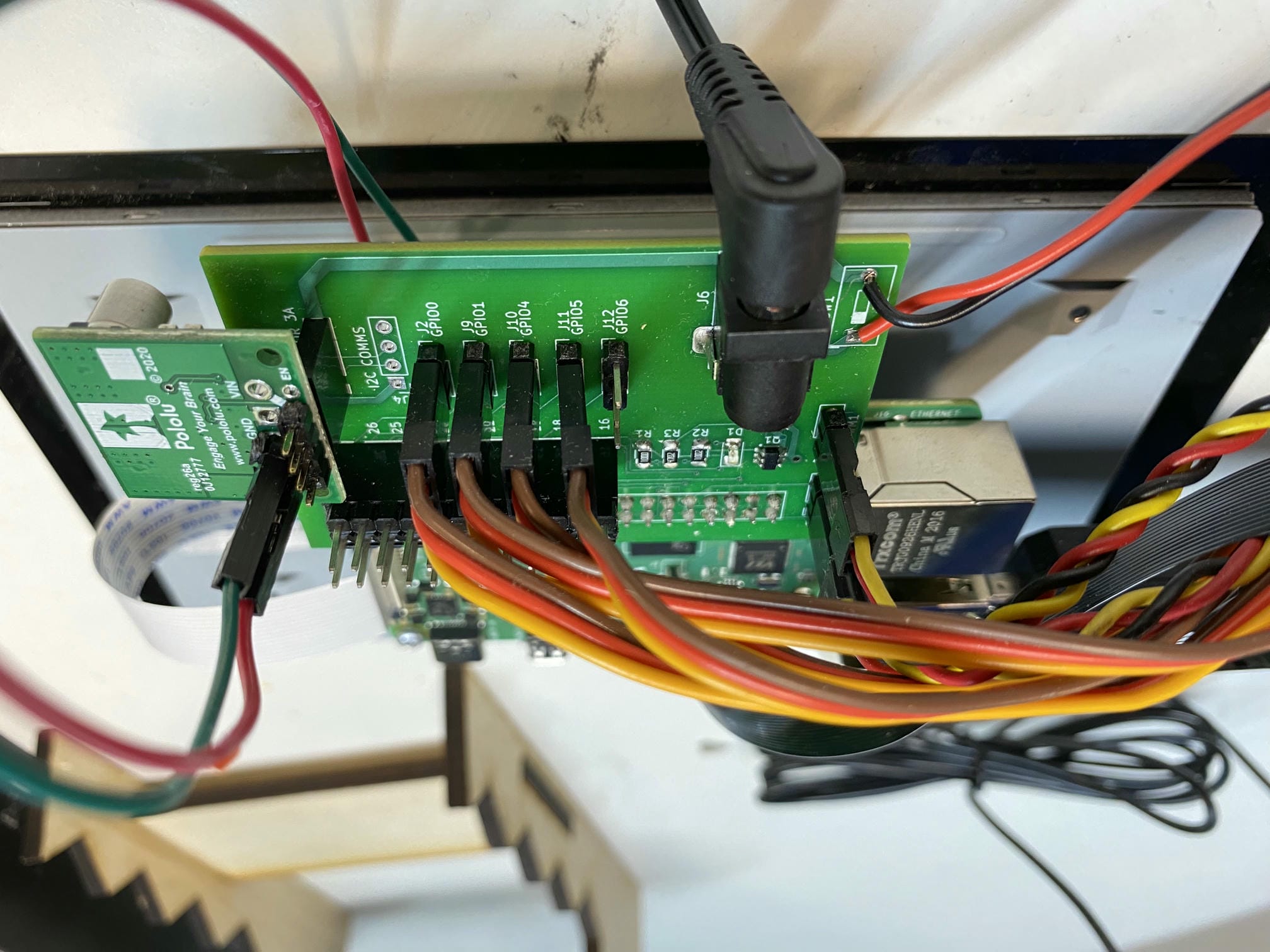

I first built a pan-tilt gimbal from two servos and brackets, which I then mounted to an acrylic plate. I attached a camera to the top of the gimbal using a laser-cut acrylic piece. The “brains” of my system are a Raspberry Pi 4 and a custom PCB shield I designed in Altium. The PCB lets me plug my servos directly into the Pi, since the board breaks out the two PWM pins and ground to a header.

I also added several ports for buttons to control audio playback. There are five ports in total: record, pause, play, solar, and one extra button, like the buttons seen in the movie. I vinyl-cut stickers for my momentary switches to make the labeling clear, and embedded each switch in an acrylic control board.

Importantly, I wanted the entire system to run on battery power without needing to stay plugged into the wall. You can purchase existing modules such as the PiJuice, but they would mechanically interfere with my custom PCB (and they’re also pretty expensive), so I thought I might as well integrate battery power into the PCB directly. To do so, I added a zero-voltage diode and a port for a Pololu step-down converter, so the system could be powered by AA batteries. The buck converter steps the 9V battery supply down to 5V, and the board also provides 5V and ground ports to power a touchscreen. This touchscreen would let people play an unlimited selection of songs and, in future iterations, display WALL-E's battery life.

On the software end, this system uses OpenCV (cv2). When the camera sees a person, it rotates the servos to keep the face as close to the center of the frame as it can. If no face is detected for a while, it goes into discovery mode and starts looking around in search of one. If it sees multiple faces, it tries to move to the average center of all of them. This was nontrivial to program and took me a long time to tune.
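To make the tracking behavior concrete, here is a minimal sketch of the logic described above, not the project's actual code. It assumes face boxes arrive as (x, y, w, h) tuples, which is the format OpenCV's cascade detectors return, and it uses a simple proportional controller. The frame size, gain, deadband, timeout, sweep step, angle limits, and sign conventions are all hypothetical values chosen for illustration.

```python
# Illustrative constants; every value here is an assumption, not taken from the project.
FRAME_W, FRAME_H = 640, 480  # camera resolution in pixels
GAIN = 0.05                  # degrees of servo travel per pixel of error
DEADBAND = 20                # ignore errors smaller than this many pixels
LOST_LIMIT = 30              # frames without a face before discovery mode starts
SWEEP_STEP = 2.0             # degrees the pan servo moves per frame while searching
ANGLE_LIMIT = 90.0           # servo travel is clamped to +/- this angle


def average_center(faces):
    """Return the mean center (cx, cy) of all face boxes, or None if there are none."""
    if len(faces) == 0:
        return None
    cx = sum(x + w / 2 for x, y, w, h in faces) / len(faces)
    cy = sum(y + h / 2 for x, y, w, h in faces) / len(faces)
    return cx, cy


def clamp(value, low, high):
    return max(low, min(high, value))


class Tracker:
    """Holds the pan/tilt angles and updates them once per camera frame."""

    def __init__(self):
        self.pan = 0.0
        self.tilt = 0.0
        self.lost_frames = 0
        self.sweep_dir = 1

    def update(self, faces):
        center = average_center(faces)
        if center is None:
            self.lost_frames += 1
            if self.lost_frames >= LOST_LIMIT:
                # Discovery mode: sweep the pan axis back and forth.
                self.pan = clamp(self.pan + self.sweep_dir * SWEEP_STEP,
                                 -ANGLE_LIMIT, ANGLE_LIMIT)
                if abs(self.pan) >= ANGLE_LIMIT:
                    self.sweep_dir *= -1
            return self.pan, self.tilt

        self.lost_frames = 0
        err_x = center[0] - FRAME_W / 2
        err_y = center[1] - FRAME_H / 2
        # Proportional step toward the face; the sign depends on how the servos are mounted.
        if abs(err_x) > DEADBAND:
            self.pan = clamp(self.pan - GAIN * err_x, -ANGLE_LIMIT, ANGLE_LIMIT)
        if abs(err_y) > DEADBAND:
            self.tilt = clamp(self.tilt - GAIN * err_y, -ANGLE_LIMIT, ANGLE_LIMIT)
        return self.pan, self.tilt
```

In a real loop, each camera frame would be passed through a face detector and the resulting boxes handed to `Tracker.update`, with the returned angles written to the two servos. The deadband is one way to reduce jitter when the face is already near the center; the gain is the value that would need the most tuning.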

To detect when buttons were pressed and released, I used gpiozero's Button class. Recording for an unknown length of time turned out to be pretty tricky, since most existing libraries have you specify the duration when you call the record function. To get around this, I used PyAudio's non-blocking stream mode, which delivers audio through callback functions. I used pygame's mixer to do something similar for playback, where a sound loops only for as long as its button is held. If multiple buttons are pressed at once, their sounds overlap.
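As a rough sketch of the open-ended recording idea, assuming recording runs for as long as a button is held: a PyAudio stream opened with a `stream_callback` hands over audio chunks on its own thread, so the program can keep collecting them until the button's release handler closes the stream. The `HoldToRecord` class, the `run_on_pi` helper, the GPIO pin number, and the audio settings are all hypothetical and are not taken from the project.

```python
class HoldToRecord:
    """Collects audio chunks from a callback-driven stream until stop() is called."""

    def __init__(self, open_stream, continue_flag):
        # open_stream: callable that takes a callback and returns a started stream
        # continue_flag: value the callback returns to keep the stream running
        self._open_stream = open_stream
        self._continue = continue_flag
        self._stream = None
        self.frames = []

    def _on_audio(self, in_data, frame_count, time_info, status):
        # Called by the audio library for every captured chunk.
        self.frames.append(in_data)
        return (None, self._continue)

    def start(self):
        if self._stream is not None:
            return  # already recording
        self.frames = []
        self._stream = self._open_stream(self._on_audio)

    def stop(self):
        if self._stream is None:
            return b""
        self._stream.stop_stream()
        self._stream.close()
        self._stream = None
        return b"".join(self.frames)


def run_on_pi(pin=17):
    """Wire the recorder to a real button and microphone (pin 17 is an assumption)."""
    # Imported here so the class above can be exercised without the hardware libraries.
    from signal import pause

    import pyaudio
    from gpiozero import Button

    pa = pyaudio.PyAudio()

    def open_stream(callback):
        # Non-blocking mode: PyAudio calls `callback` for each chunk on its own thread.
        return pa.open(format=pyaudio.paInt16, channels=1, rate=44100,
                       input=True, frames_per_buffer=1024,
                       stream_callback=callback)

    recorder = HoldToRecord(open_stream, pyaudio.paContinue)
    button = Button(pin)
    button.when_pressed = recorder.start
    button.when_released = recorder.stop
    pause()  # keep the script alive so the button handlers can fire
```

Because the callback only appends to a list, no duration ever has to be chosen up front; the recording length is simply however long the stream stays open. The bytes returned by `stop()` could then be written out with the standard `wave` module.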

Lastly, I learned how to SSH into the Pi so that it wouldn't need to stay physically connected to my laptop at all times.

Overall, this project was a whole lot of fun. It was my first computer vision project, and I especially enjoyed working on the controls and playback!

More about 2.679

Here's a video that MIT MechE made about the class! The video shows my gimbal in action.